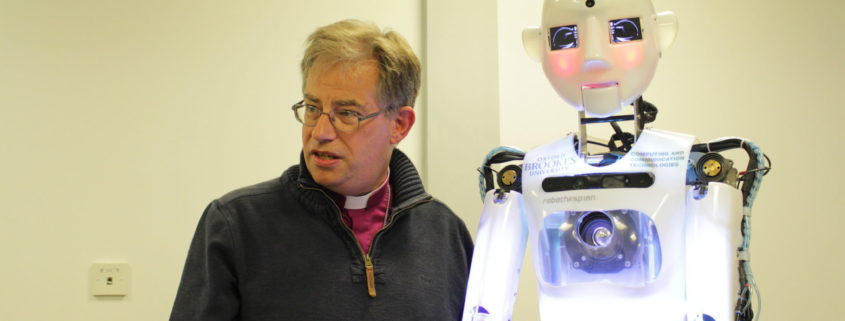

I’ve spent most Tuesday afternoons this term in the House of Lords Select Committee on Artificial Intelligence. We’ve been hearing evidence on every aspect of Artificial Intelligence as it affects business, consumers, warfare, health, education and research.

In the meantime, public interest and debate in Artificial Intelligence (AI) continue to grow. In the last week or so, there have been more news stories about self-driving cars, about Uber’s data breach and about dire warnings from Elon Musk and Hillary Clinton; announcements in the Budget about investment in technology; and much stealthy marketing of AI in the guise of digital assistants for the home.

The Committee is due to report in April. We are just beginning the process of distilling down all we have heard into the key issues for public policy.

As we begin this process of reflection, these are my top eight issues in AI and the deep theological questions they raise.

- We need a better public debate and education

AI and machine learning technology is making a big difference to our lives and is set to make a bigger difference in the future. There is consensus that major disruptive change is on its way. People differ about how quickly it will arrive. The rule of thumb, I’ve learned, is that we underestimate the impact of change through technology but overestimate the speed.

Public debate and scrutiny are vital. We need to understand this technology so that we can live well with it, protect our data and identity (and those of our children and grandchildren) and ensure that technology serves us well. Debate is also vital to build public trust and confidence. A few years ago, the development of GM foods was halted because public trust and confidence did not keep pace with the technology. Public debate is vital.

- AI and social media are shaping political debate

There is very good evidence that AI and social media used together are shaping the democratic process and changing the nature of public debate. Technology is partly responsible for the unexpected outcomes of elections and referenda in recent years.

AI and social media make it possible for tailored messages to be delivered directly to voters in a personalised way. The nature of public truth and political debate is therefore changing. We are less likely to trust single authoritative sources of news. We listen and debate in silos. There is a wider spectrum of ideas. For the first time in history, those who provide the platforms are not responsible for the content published on them. There is good evidence that this is leading to sharper, more antagonistic and more polarised debate.

- AI will massively transform the world of work

There has been a range of serious studies. They suggest that between 20% and 40% of jobs in the economy are at high risk of automation by the early 2030s. The economic effects will fall unevenly across the United Kingdom. The greatest impact will be felt in the poorest communities, which are still adjusting to the loss of jobs in mining and manufacturing. There is a risk of growing inequality. Traditional white-collar jobs in accounting and law will be similarly affected.

The disruption will probably be enough to break the traditional life script of 20 years of education followed by 40 years of work and retirement. We need to prepare for a world in which this is no longer normal. We will need radical new ways of structuring support across the whole of society. Universal Basic Income or Universal Basic Services need to be actively explored. This will be the major economic challenge for government over the next decade.

- Education is key to the future

STEM subjects and computer science are vital for everyone, but not to the exclusion of the humanities. We need to educate for the whole of life, not simply train economic units of productivity. In a world which is uncertain what it means to be human, we need a fresh emphasis on ethics and values.

- Better data is key

There are two ingredients in the development of machine learning: computing power and good data. Government needs to support small and medium enterprises and start-up businesses by making both more available; otherwise the major companies who are already ahead are likely to grow their advantage.

There are significant issues surrounding the security and quality of data, particularly in health care, but there are also huge advantages in making that data available. Some of the major benefits of AI to humanity are likely to come in better diagnosis of disease and in the enhancement (not replacement) of treatments offered by practitioners. But the data needs to be of the highest quality to prevent bias creeping into the outcomes.

- Ethics needs to run through everything

AI brings immense potential for good but also significant potential for harm if used solely for profit and without thought for the consequences. There are very obvious areas where AI can do immense damage: weaponisation, the sexualisation of machines and the acceleration of inequality.

The very best companies are highly ethical, publish codes of practice and are making a major contribution in this area. But statements of ethical intent, education for ethics and codes of good practice need to be universal.

- We need to grow the AI economy

New jobs and roles will be created in this fourth industrial revolution. The economic prosperity of the country will depend on how seriously we take investment in this area over the next five years. Other economies are making massive investment. The United Kingdom has some of the best research in the world but without continued investment and better education at all levels we will fall further behind the global leaders.

We have some of the best universities and researchers in the world. But many businesses, branches of local and national government, services and charities have yet to make the transition to a digital economy, which is a necessary first step to being AI-ready.

- We need great leadership to shape the future

Leadership of developments in AI is currently dispersed and unclear. Developments in AI demand a sustained, coordinated response across government and wider society and clear, ethical leadership alert to both the dangers and the possibilities of AI.

* * * * * * * *

There are some key theological issues here. My list is growing but five stand out:

- What does it mean to be human?

Every advance in AI leads to deeper questions about our humanity. As a Christian, I believe God became a human person in Jesus Christ. Our faith has profound things to say about human identity.

- What does it mean to be created and a creator?

A key part of being human from a Christian perspective is understanding that we are part of creation but with the power to create. We need to understand both our limits and our potential. AI encourages humanity to dream dreams but not always to set boundaries.

- Ethics needs to run through everything: truth

We need continually to emphasise the importance of truth, faithfulness, equality, respect for individuals, deep wisdom and the insights which come from human discourse and the whole ethical tradition, deeply rooted in Christianity and in other faiths.

- We need to be alert to increasing inequality and poverty of opportunity

The indications are already clear: without intervention, AI is far more likely to increase inequality significantly than to decrease it. AI needs to be held within a vision for global economics and politics which is deeper and better than free-market capitalism.

- There is immense potential for good in AI but also immense potential for harm

Serious damage can result from the wrong use of data and lives can be distorted. Machines can and will be sexualised which will shape the humanity of those who use them. Weaponisation of AI requires very careful international debate and global restraint.