The ethical questions surrounding the use of AI and data are manifold and large. Sooner or later they all lead back to the question “what does it mean to be a fully human person in a flourishing society in the 21st Century…”

Posts

The Bishop of Oxford, the Rt Revd Steven Croft, spoke in a debate in the House of Lords this afternoon about protecting and representing the interests of future generations in policy making. Bishop Steven spoke on climate chaos, the rise of artificial intelligence and the impact of both on young people’s mental health.

Join Bishop Steven at the Mass Lobby of MPs on 26 June. Full details here: https://www.theclimatecoalition.org/thetimeisnow

“My Lords, I warmly welcome this debate and want to express my appreciation to Lord Bird for his initiative and his proposals. Lord Bird has set out very well the case for a Select Committee and for a Future Generations Commissioner.

The moral case has shifted in recent years. In the Anthropocene era, humanity’s effect on the environment means that the interests not just of the next generation but of every generation beyond that need to be protected in our policy making.

The world is living through deepening environmental catastrophe. The impact of climate change is already severe. It will become worse with each decade and each generation. The world is currently heading for average global warming of 2 degrees and more by 2050. Global net carbon emissions continue to rise. The risks of unforeseen and catastrophic compound effects on the environment increase with every year.

My Lords, the two biblical images of hell are a burning planet too hot to sustain life and a rubbish dump. We are in danger of bequeathing both to our children and grandchildren. It is hugely irresponsible to take short term decisions in the interests only of the current generation.

I warmly welcome the government’s historic commitment to a net zero carbon economy by 2050 and I congratulate the Prime Minister on naming this goal as a vital part of her legacy. I warmly welcome the government’s international leadership and the bid to host the vital 2020 Climate Summit. These goals need support across Parliament. The voice of those future generations needs to be strengthened in that debate.

Future generations also need to be protected in the rapid pace of technological change. Here I speak as a Board Member of the Centre for Data Ethics and Innovation. The pace of change and the effects of technology on the mental health of the young are significant.

I warmly commend the Information Commissioner’s Office’s recent guidelines on Age Appropriate Design, which aim to protect the most vulnerable from the predatory big tech companies. I warmly commend the government for bringing forward the Online Harms White Paper. I hope both will be turning points in the development of new technologies which protect rather than exploit the most vulnerable.

We will need in the coming years agility and public leadership in responding to new technologies and data in the areas of health, education, the labour market, smart cities, algorithmic decision making, facial recognition and the regulation of the mining of personal information for commercial gain. The interests of future generations need a voice.

Finally, these proposals are so helpful in that they address a decrease in social cohesion across the generations. The APPG on Social Cohesion recently published a comprehensive study on intergenerational connection.

The generations have become increasingly segregated. We can allow that process of drift to continue with serious social consequences. Or we can exercise leadership to build social capital between the generations. Families and faith communities have a vital role to play and are part of the glue which binds generations together. Local government has a role as does business and the third sector. But national government must play its part.

The proposals to give a structured voice to the interests of future generations are warmly to be welcomed. I warmly support Lord Bird’s proposals and hope they will attract the support of the whole House.”

Steven Croft

- Watch Bishop Steven speaking in the debate and follow Bishop Steven on Facebook

Developing Artificial Intelligence in the UK

For the past year, I’ve been a member of the House of Lords Select Committee on Artificial Intelligence. The Committee of 13 members received 223 pieces of written evidence and took oral evidence from 57 witnesses over 22 sessions between October and December. It has been a fascinating process.

The Committee’s report is published today. It’s called AI in the UK: ready, willing and able? You can find it on the Committee website.

When I first started to engage with questions of Artificial Intelligence, I thought the real dangers to humankind were a generation away and the stuff of science fiction. The books and talks that kept me awake at night were about general AI: conscious machines (probably a generation away, if not more).

The more I heard, the more the evidence that kept me awake at night was in the present not the future. Artificial Intelligence is a present reality not a future possibility. AI is used, and will be used, in all kinds of everyday ways. Consider this vignette from the opening pages of the report…

You wake up, refreshed, as your phone alarm goes off at 7:06am, having analysed your previous night’s sleep to work out the best point to interrupt your sleep cycle. You ask your voice assistant for an overview of the news, and it reads out a curated selection based on your interests. Your local MP is defending herself—a video has emerged which seems to show her privately attacking her party leader. The MP claims her face has been copied into the footage, and experts argue over the authenticity of the footage. As you leave, your daughter is practising for an upcoming exam with the help of an AI education app on her smartphone, which provides her with personalised content based on her strengths and weaknesses in previous lessons…

There is immense potential for good in AI: labour saving routine jobs can be delegated; we can be better connected; there is a remedy for stagnant productivity in the economy which will be a real benefit; there will be significant advances in medicine, especially in diagnosis and detection. In time, the roads may be safer and transport more efficient.

There are also significant risks. Our data in the wrong hands means that political debate and opinion can be manipulated in very subtle ways. Important decisions about our lives might be made with little human involvement. Inequality may widen further. Our mental health might be eroded because of the big questions raised about AI.

This is a critical moment. Humankind has the power now to shape Artificial Intelligence as it develops. To do that we need a strong ethical base: a sense of what is right and what is harmful in AI.

I’m delighted that the Prime Minister has committed the United Kingdom to give an ethical lead in this area. Theresa May said in a recent speech in Davos in January:

“We want our new world leading centre for Data Ethics and Innovation to work closely with international partners to build a common understanding of how to ensure the safe, ethical and innovative development of artificial intelligence”

That new ethical framework will not come from the Big Tech companies and Silicon Valley which seek the minimum regulation and maximum freedom. Nor will it come from China, the other major global investor in AI, which takes a very different view of how personal data should be handled. It is most likely to come from Europe, with its strong foundation in Christian values and the rights of the individual and most of all, at present, from the United Kingdom, which is also a global player in the development of technology.

The underlying theme of the Select Committee’s recommendations is that ethics must be put at the centre of the development and use of AI. We believe that Britain has a vital role in leading the international community in shaping Artificial Intelligence for the common good rather than passively accepting its consequences.

The Government has already announced the creation of a new Centre for Data Ethics and Innovation to lead in this area. The Select Committee’s proposals will support the Centre’s work.

Towards the end of our enquiry, the Committee shaped five principles which we offer as a starting point for the Centre’s work. They emerged from very careful listening to those who came to meet us from industry and universities and regulators. Almost everyone we met was concerned about ethics and the need for an ethical vision to guide the development of these very powerful tools which will shape society in the next generation.

These are our five core principles (or AI Code) with a short commentary on each:

Artificial intelligence should be developed for the common good and benefit of humanity

Why is this important? AI is about more than making tasks easier or commercial advantage or one group exploiting another. AI is a powerful technology which can shape our understanding of work and income and our health. It’s too important to be left to multinational companies operating on behalf of their shareholders or to a tiny group of innovators. We need a big, wide public debate. It’s also vital that as a society we encourage the best minds towards using AI to solve the most critical problems facing the planet. It would be a tragedy if the main fruits of AI were simply better computer generated graphics or quicker ways to order takeaway pizza.

Artificial Intelligence should operate on principles of intelligibility and fairness

This is absolutely vital. There is a striking tendency in AI at the moment to anthropomorphise: to make machines seem human. This looks harmless at first until you begin to consider the consequences. Suppose in a few years’ time you are unable to tell whether that call from the bank is from an AI or a person? Suppose you apply for a job and the decisions about your application are all taken by a computer?

Suppose that computer is using a faulty data set, biased against you, but you never get to know that? There are already a number of chatbots available offering cognitive behavioural therapy. Some of them charge money. Suppose they get better and better at imitating humans. What is to prevent vulnerable people being exploited? Regulation and monitoring are needed not for the first generation of developers (who are mainly very ethical) but for the generation after that.

Artificial intelligence should not be used to diminish the data rights or privacy of individuals, families or communities.

The Cambridge Analytica and Facebook scandals erupted the week after the Select Committee agreed its final report. They underline the need for this principle. Data is the oil of the AI revolution. It is vital to fuel machine learning and wide application of AI. But data also contains the essence of identity and personality. It is fundamental that our data is safeguarded and not exploited.

All citizens have the right to be educated to enable them to flourish mentally, emotionally and economically alongside artificial intelligence.

AI is a disruptive technology. Some jobs will diminish or disappear. New jobs will emerge—but they will be different and probably not there in the same numbers as the jobs we lose. Inequality will increase unless we take positive steps to counter this. The economic predictions are uncertain. It is however absolutely clear that the only way to counter this disruption is education and lifelong learning. That education is not only about reskilling the workforce. There is a universal need for everyone to learn how to flourish in a new digital world. Providing that education is the responsibility of government.

The autonomous power to hurt, destroy or deceive human beings should never be vested in artificial intelligence.

Autonomous weapons are a present reality and a future prospect. This will change warfare for ever. The UK’s position on them is, at best, ambiguous: we use definitions which are out of step with the rest of the world. The Select Committee calls on the government for much greater clarity here and again, for a wider public debate. Deception is already a feature of AI in cyberwarfare and covert attempts to change perceptions of truth and public opinion. Unless we guard values of public truth and courtesy and freedom then our society is vulnerable.

Artificial Intelligence is here to stay. It has the capacity to shape our lives in many different ways. This is the moment to ensure that humankind shapes AI to serve the common good and all humanity rather than allowing AI driven by commercial or other interests to shape our future and our national life.

I’ve spent most Tuesday afternoons this term in the House of Lords Select Committee on Artificial Intelligence. We’ve been hearing evidence on every aspect of Artificial Intelligence as it affects business, consumers, warfare, health, education and research.

In the meantime, public interest and debate in Artificial Intelligence (AI) continues to grow. In the last week or so, there have been more news stories about self-driving cars; about Uber’s data breach; dire warnings from Elon Musk and Hillary Clinton; announcements in the budget about investment in technology; and much stealthy marketing of AI in the guise of digital assistants for the home.

The Committee is due to report in April. We are just beginning the process of distilling down all we have heard into the key issues for public policy.

As we begin this process of reflection, these are my top eight issues in AI and the deep theological questions they raise.

- We need a better public debate and education

AI and machine learning technology is making a big difference to our lives and is set to make a bigger difference in the future. There is consensus that major disruptive change is on its way. People differ about how quickly it will arrive. The rule of thumb, I’ve learned, is that we underestimate the impact of change through technology but overestimate the speed.

Public debate and scrutiny are vital. We need to understand new technology so that we can live well with it, protect our data and identity and those of our children and grandchildren, and ensure technology serves us well. It is also vital to build public trust and confidence. A few years ago, the development of GM foods was halted because public trust and confidence did not keep pace with the technology. Public debate is vital.

- AI and social media are shaping political debate

There is very good evidence that AI and social media used together are shaping the democratic process and changing the nature of public debate. Technology is partly responsible for the unexpected outcomes of elections and referenda in recent years.

AI and social media make it possible for tailored messages to be delivered directly to voters in a personalised way. The nature of public truth and political debate is therefore changing. We are less likely to trust single authoritative sources of news. We listen and debate in silos. There is a wider spectrum of ideas. Those who offer social media platforms are not responsible for the content published there (for the first time in history). There is good evidence that this is leading to sharper, more antagonistic and polarised debate.

- AI will massively transform the world of work

There have been a range of serious studies. Between 20% and 40% of jobs in the economy are at high risk of automation by the early 2030s. The economic effects will fall unevenly across the United Kingdom. The greatest impact will be felt in the poorest communities still adjusting to the loss of jobs in mining and manufacturing. There is a risk of growing inequality. Traditional white collar jobs in accounting and law will be similarly affected.

The disruption will probably be enough to break the traditional life script of 20 years of education followed by 40 years of work and retirement. We need to prepare for a world in which this is no longer normal. We will need radical new ways of structuring support across the whole of society. Universal Basic Income or Universal Basic Services need to be actively explored. This will be the major economic challenge for government over the next decade.

- Education is key to the future

STEM subjects and computer sciences are vital for everyone. But not to the exclusion of the humanities. We need to educate for the whole of life not simply train economic units of productivity. In a world which is uncertain what it means to be human, we need a fresh emphasis on ethics and values.

- Better data is key

There are two ingredients in the development of machine learning: computing power and good data. Government needs to support small and medium enterprises and start up businesses by making both more available: otherwise the major companies who are already ahead are likely to grow their advantage.

There are significant issues surrounding the security and quality of data, particularly in health care, but also huge advantages in making that data available. Some of the major benefits of AI to humanity are likely to come in better diagnosis of disease and in enhancement (not replacement) of treatments offered by practitioners. But the data needs to be of the highest quality to prevent bias creeping into the outcomes.

- Ethics needs to run through everything

AI brings immense potential for good but also significant potential for harm if used solely for profit and without thought for the consequences. There are very obvious areas where AI can do immense damage: weaponisation, the sexualisation of machines and the acceleration of inequality.

The very best companies are highly ethical, publish codes of practice and are making a major contribution in this area. But statements of ethical intent, education for ethics and codes of good practice need to be universal.

- We need to grow the AI economy

New jobs and roles will be created in this fourth industrial revolution. The economic prosperity of the country will depend on how seriously we take investment in this area over the next five years. Other economies are making massive investment. The United Kingdom has some of the best research in the world but without continued investment and better education at all levels we will fall further behind the global leaders.

We have some of the best Universities and researchers in the world. But many businesses, branches of local and national government, services and charities have yet to make the transition to a digital economy which is a necessary first step to being AI ready.

- We need great leadership to shape the future

Leadership of developments in AI is currently dispersed and unclear. Developments in AI demand a sustained, coordinated response across government and wider society and clear, ethical leadership alert to both the dangers and the possibilities of AI.

* * * * * * * *

There are some key theological issues here. My list is growing but five stand out:

- What does it mean to be human?

Every advance in AI leads to deeper questions of humanity. As a Christian, I believe God became a human person in Jesus Christ. Our faith has profound things to say about human identity.

- What does it mean to be created and a creator?

A key part of being human from a Christian perspective is understanding that we are part of creation but with the power to create. We need to understand both our limits and our potential. AI encourages humanity to dream dreams but not always to set boundaries.

- Ethics needs to run through everything: truth

We need continually to emphasise the importance of truth, faithfulness, equality, respect for individuals, deep wisdom and the insights which come from human discourse and the whole ethical tradition, deeply rooted in Christianity and in other faiths.

- We need to be alert to increasing inequality and poverty of opportunity.

The indications are already clear: without intervention, AI is more likely to increase inequality very significantly rather than decrease it. AI needs to be held within a vision for global economics and politics which is deeper and better than free market capitalism.

- There is immense potential for good in AI but also immense potential for harm.

Serious damage can result from the wrong use of data and lives can be distorted. Machines can and will be sexualised which will shape the humanity of those who use them. Weaponisation of AI requires very careful international debate and global restraint.

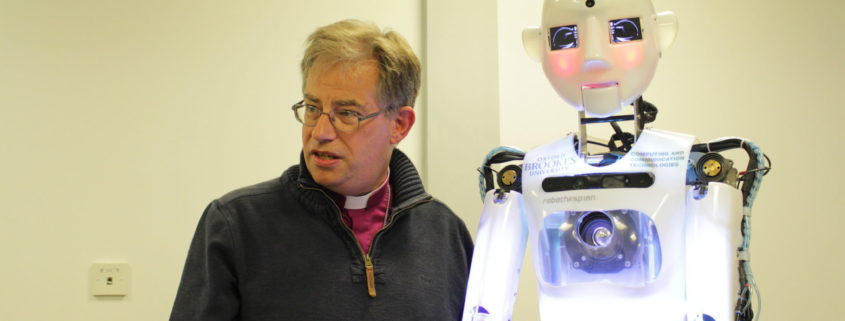

The one on the right is Artie.

Artie is a Robothespian. We met last week at Oxford Brookes University. Artie showed me some of his moves. He plays out scenes from Star Wars and Jaws with a range of voices, movements, gestures and special effects (including shark fins swimming across the screens which form his eyes).

Artie can’t yet hold an intelligent conversation but it won’t be long before his cousins and descendants can. Artificial Intelligence (AI) is now beginning to affect all of our lives.

Every time you search the internet or interact with your mobile phone or shop at a big store online, you are bumping into artificial intelligence. AI answers our questions through Siri (on the iPhone) or Alexa (on Amazon). AI matters in all kinds of ways.

I’ve been exploring Artificial Intelligence for some time now. In June I was appointed to sit on a new House of Lords Select Committee on AI as part of my work in the House of Lords. The Committee has a broad focus and is currently seeking evidence from a wide group of people and organisations. You can read about our brief here.

Here are just some of the reasons why all of this matters

Robot vacuum cleaners and personal privacy

A story in the Times caught my eye in July. It’s now possible to buy a robot vacuum cleaner to take the strain out of household chores. Perhaps you have one. The robot will use AI to navigate the best route round your living room. To do this it will make a map of your room using its onboard cameras. The cameras will then transmit the data back to the company who make the robot. They can sell the data on to well-known online retailers who can then email you with specific suggestions of cushion covers or lamps to match your furniture. All of this will be done with no human input whatsoever.

Personal boundaries and personal privacy matter. They are an essential part of our human identity and knowing who we are – and we are far more than consumers. This matters for all of us – but especially the young and the vulnerable. New technology means regulation on data protection needs to keep pace. The government announced its plans in August for a strengthening of UK data protection law.

We need a greater level of education about AI and what it can do and is doing at every level in society – including schools. The technology can bring significant benefits but it can also disrupt our lives.

Self driving lorries and the future of work

AI will change the future of work. Yesterday the government announced the first trials of automatic lorry convoys on Britain’s roads.

Within a decade, the transport industry may have changed completely. There are great potential benefits. As a society we need to face the reality that work is changing and evolving.

AI is already beginning to change the medical profession, accountancy, law and banking. There is now an app which helps motorists challenge parking fines without the help of a lawyer (DoNotPay). It has been successfully used by 160,000 people and was developed by Joshua Browder, a 20-year-old whose mission in life is to put lawyers out of business through simple technology. The chatbot-based app has already been extended to help the homeless and refugees access good legal advice for free.

Every development in Artificial Intelligence raises new questions about what it means to be human. According to Kevin Kelly, “We’ll spend the next three decades – indeed, perhaps the next century – in a permanent identity crisis, continually asking what humans are good for”[1].

As a Christian, I want to be part of that conversation. At the heart of our faith is the good news that God created the universe, that God loves the world and that God became human to restore us and show us what it means to live well and reach our full potential.

Direct messaging and political influence

The outcome of the last two US Presidential Elections has been shaped and influenced by AI: the side with the best social media campaigns won. Professor of Machine Learning, Pedro Domingos, describes the impact algorithm driven social media had on the Obama-Romney campaign[2]. In his excellent documentary “Secrets of Silicon Valley” Jamie Bartlett explores the use of the same technology by the Trump Presidential campaign in 2016 which again led to victory in an otherwise close campaign.

There are signs that social media, with very detailed targeting of voters using AI, was also used to good effect by Labour in the 2017 election.

In July six members of the House of Lords led by Lord Puttnam wrote to the Observer raising questions about the proposed takeover of Sky by Rupert Murdoch. In an open letter they argue, persuasively in my view, that this takeover gives a single company access to the personal data of over 13 million households: data which can then be used for micro ads and political campaigning.

The tools offered by AI are immensely powerful for shaping ideas and debate in our society. Christians need to be part of that dialogue, aware of what is happening and making a contribution for the sake of the common good.

Swarms and drones and the weaponisation of AI

Killer robots already exist in the form of autonomous sentry guns in South Korea. Many more are in development. On Monday 116 founders and leaders of robotics companies led by Elon Musk called on the United Nations to prevent a new arms race.

Technology itself is a neutral thing but carries great power to affect lives for good or for ill. If there is to be a new arms race then we need a new public debate. The UK Government will need to take a view on the proliferation and use of weaponry powered by AI. The 2015 film Eye in the Sky starring Helen Mirren and directed by Gavin Hood is a powerful introduction to the ethical issues involved in remote weapons. Autonomous weapons raise a new and very present set of questions. How will the UK Government respond? Christians need a voice in that debate.

The Superintelligence: creating a new species

It’s a long way from robot vacuum cleaners to a superintelligence. At the moment, much artificial intelligence is “narrow”: we can create machines which are very good at particular tasks (such as beating a human at “Go”) but not machines which have broad general intelligence and consciousness. We have not yet created intelligent life.

But scientists think that day is not far away. Some are hopeful of the benefits of non human superintelligence. Some, including Stephen Hawking, are extremely cautious. But there is serious thinking happening already. Professor Nick Bostrom is the Director of the Future of Humanity Institute at the University of Oxford. In his book, Superintelligence, he analyses the steps needed to develop superintelligence, the ways in which humanity may or may not be able to control what emerges and the kind of ethical thinking which is needed. “Human civilisation is at stake” according to Clive Cookson, who reviewed the book for the Financial Times[3].

The resources of our faith have much to say in all of this debate around AI: about fair access, privacy and personal identity, about persuasion in the political process, about what it means to be human, about the ethics of weaponisation and about the limits of human endeavour.

In the 19th Century and for much of the 20th Century, science asked hard questions of faith. Christians did not always respond well to those questions and to the evidence of reason. But in the 21st Century, faith needs to ask hard questions once again of science.

As Christians we need to think seriously about these questions and engage in the debate. I’ll write more in the coming months as the work of the Select Committee moves forward.

[1] Kevin Kelly, The Inevitable: understanding the 12 technological forces that will shape our future, Penguin, 2016, p. 49

[2] Pedro Domingos, The Master Algorithm, How the quest for the ultimate learning machine will remake our world, Penguin, 2015, pp.16-19.

[3] Nick Bostrom, Superintelligence: paths, dangers, strategies, Oxford, 2014